Optimizing Quiz Generation: Structured Outputs & Smarter Model Choices

How we improved the quality and cost of quiz generation by using structured outputs and smarter model choices.

The Desi Dictions team has been focused on building out one of our new features: Quiz Generation. This is part of our broader effort to turn the Desi Dictions chatbot into a full-fledged AI tutor, one capable of generating personalized lessons, flashcards, readings, homework, and now quizzes, all through a single conversational interface.

Why Quiz Generation Was Tricky (and Expensive)

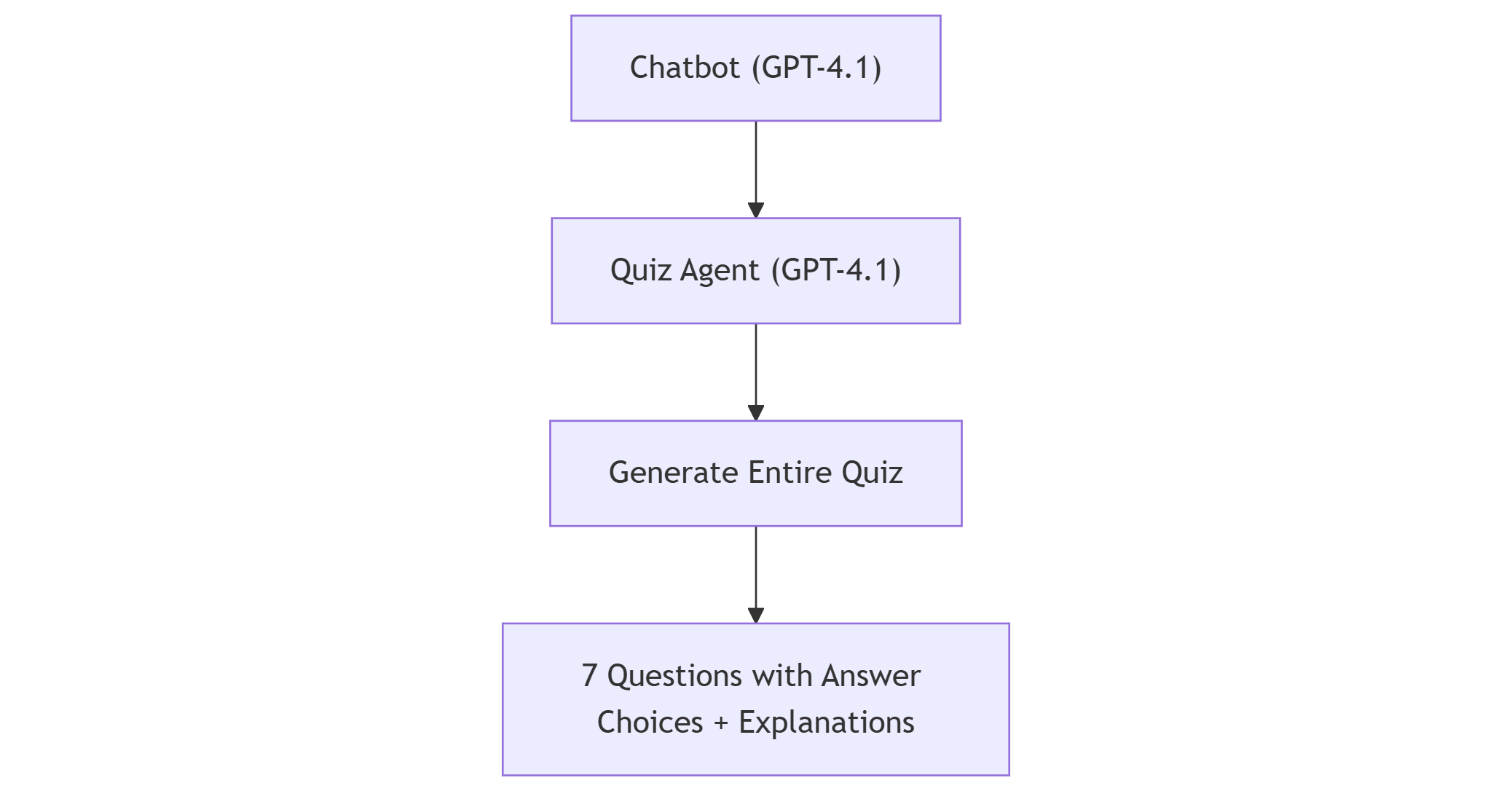

We started by using GPT-4.1, which had already proven itself as a reliable tool for generating Hindi/Urdu flashcards. It consistently delivered accurate grammar explanations and transliterations in Roman script—something essential for learners who can't yet read the native scripts.

However, quiz generation presented a new challenge. Each quiz included seven questions, and each question came with:

- A full prompt

- Four answer choices

- An explanation for the correct answer

Multiply that by seven, and the token count ballooned fast. The structure of the quiz generation tool made things worse. Our system prompt was verbose, with detailed instructions for several types of questions (fill-in-the-blank, translation, grammar, and so on), and that gave the model too much freedom. The result was high token costs and question quality that was hard to keep consistent.
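To see why a single request for a whole quiz grows so quickly, the arithmetic can be sketched in a few lines of Python. This is only a rough estimate under stated assumptions: the token sizes are made-up placeholders rather than measurements from our system, the function names are hypothetical, and the short user message is ignored. It shows that output grows linearly with the number of questions, while the long multi-type system prompt is paid as input on every request.

```python
# Rough token estimate for generating a whole quiz in one request.
# All sizes passed in are hypothetical placeholders, not measured values.

QUESTIONS_PER_QUIZ = 7    # from the post
CHOICES_PER_QUESTION = 4  # from the post


def tokens_per_question(prompt: int, choice: int, explanation: int) -> int:
    """Output tokens for one question: its prompt, four choices, and an explanation."""
    return prompt + CHOICES_PER_QUESTION * choice + explanation


def tokens_per_quiz(system_prompt: int, prompt: int, choice: int, explanation: int,
                    n_questions: int = QUESTIONS_PER_QUIZ) -> tuple[int, int]:
    """Return (input_tokens, output_tokens) for one monolithic quiz request.

    Only the system prompt is counted as input; every question adds to the output.
    """
    output_tokens = n_questions * tokens_per_question(prompt, choice, explanation)
    return system_prompt, output_tokens


if __name__ == "__main__":
    # Hypothetical sizes for a long, multi-type system prompt and wordy questions.
    print(tokens_per_quiz(system_prompt=2000, prompt=60, choice=15, explanation=80))
    # -> (2000, 1400)
```

Input and output tokens are usually priced differently, so the sketch returns the two counts separately instead of adding them together.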

Our Solution: Structured Outputs + Gemini 1.5 Flash

To address both cost and quality concerns, we made two key changes:

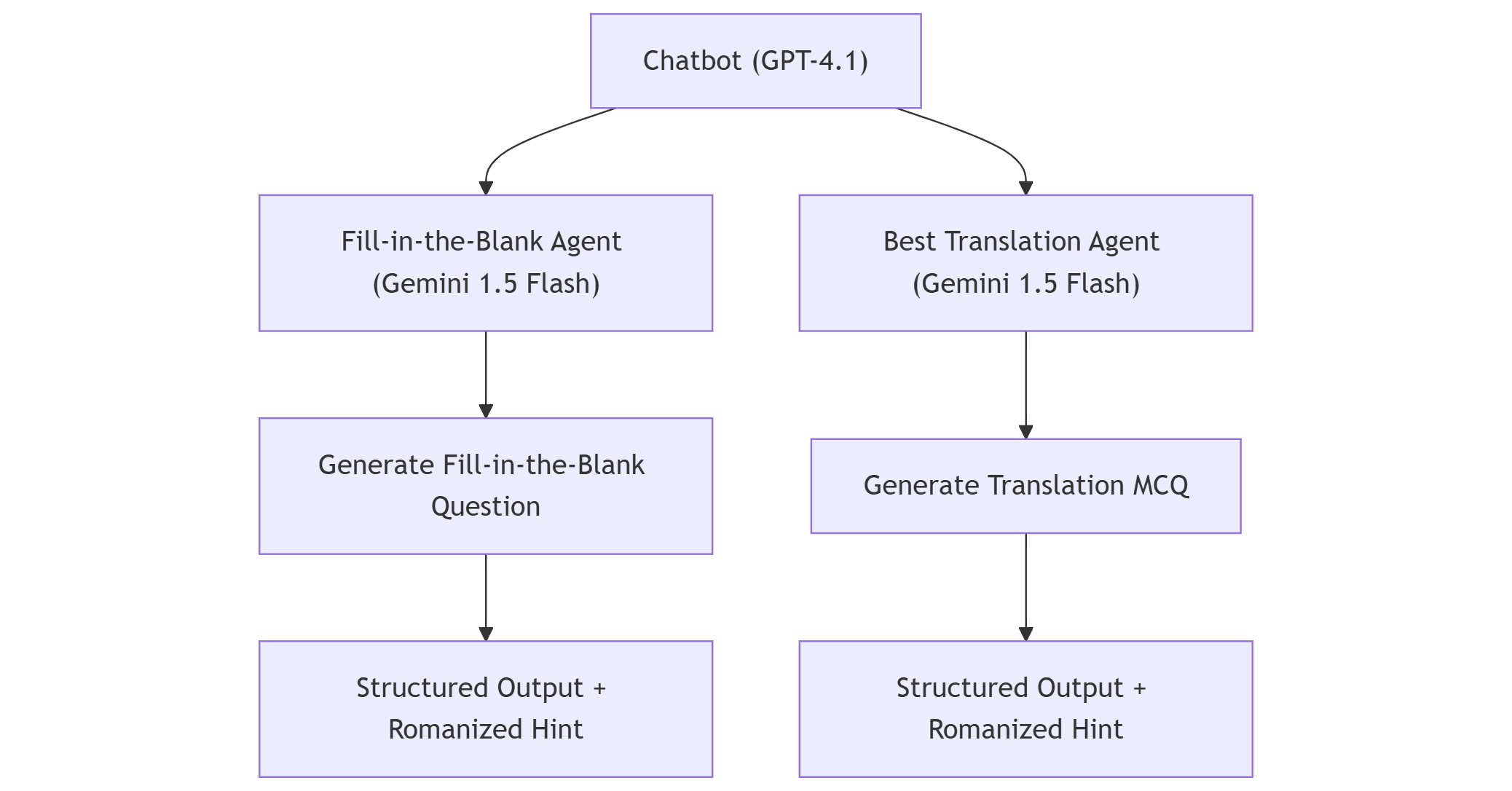

1. Modularized Question Generation with Structured Output Schemas

Instead of asking the LLM to generate an entire quiz in one go, we split the process so that it generates one type of question at a time using structured output tools. Each question type, such as fill-in-the-blank or best English translation, now has its own dedicated tool and system prompt.
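A per-type setup like this might look like a small registry that pairs each question type with its own short system prompt and a structured-output schema. Everything here is illustrative: the `QuestionTool` class, the registry keys, the prompt wording, and the field names in `QUESTION_SCHEMA` are hypothetical. The exact schema dialect a provider's structured-output API accepts also varies, so the request is kept as a plain, provider-agnostic dict.

```python
import json
from dataclasses import dataclass

# JSON-Schema-style description of one multiple-choice question.
# Hypothetical field names; adapt to what your provider's structured-output API accepts.
QUESTION_SCHEMA = {
    "type": "object",
    "properties": {
        "prompt": {"type": "string"},
        "choices": {
            "type": "array",
            "items": {"type": "string"},
            "minItems": 4,
            "maxItems": 4,
        },
        "correct_index": {"type": "integer"},
        "explanation": {"type": "string"},
    },
    "required": ["prompt", "choices", "correct_index", "explanation"],
}


@dataclass(frozen=True)
class QuestionTool:
    """One dedicated generator per question type (hypothetical structure)."""
    name: str
    system_prompt: str
    response_schema: dict


# Hypothetical registry: each type gets a short prompt focused on that type only.
TOOLS = {
    "fill_in_the_blank": QuestionTool(
        name="fill_in_the_blank",
        system_prompt=(
            "Write exactly one Hindi/Urdu fill-in-the-blank question in Roman script "
            "with four choices, one correct answer, and a short explanation."
        ),
        response_schema=QUESTION_SCHEMA,
    ),
    "best_english_translation": QuestionTool(
        name="best_english_translation",
        system_prompt=(
            "Write exactly one question asking for the best English translation of a "
            "Romanized Hindi/Urdu sentence, with four choices and a short explanation."
        ),
        response_schema=QUESTION_SCHEMA,
    ),
}


def build_request(question_type: str, topic: str) -> dict:
    """Assemble a provider-agnostic request for a single question of one type."""
    try:
        tool = TOOLS[question_type]
    except KeyError:
        raise ValueError(f"unknown question type: {question_type!r}") from None
    return {
        "system": tool.system_prompt,
        "user": f"Topic: {topic}",
        "response_schema": tool.response_schema,
    }


if __name__ == "__main__":
    print(json.dumps(build_request("fill_in_the_blank", "possessive pronouns"), indent=2))
```

Because each prompt describes only one question type, it stays short, and adding a new type means adding one registry entry rather than editing a shared, sprawling prompt.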

2. Switching to Gemini 1.5 Flash

We migrated from GPT-4.1 to Gemini 1.5 Flash, which offers a generous free tier and much lower costs per token. This allowed us to add few-shot prompting with good/bad examples to improve output quality without worrying about costs spiraling out of control.
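Few-shot prompting with good and bad examples can be as simple as appending labeled samples, each with a one-line reason, after the instructions. The sketch below assumes that format; the `Example` class, the GOOD/BAD labels, and the sample Hindi sentence are illustrative, not our production prompt.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    """A labeled sample output used for few-shot prompting (hypothetical format)."""
    text: str
    good: bool
    reason: str


def build_few_shot_prompt(instructions: str, examples: list[Example]) -> str:
    """Append good and bad examples, each followed by a short reason, to the instructions."""
    lines = [instructions.strip(), ""]
    for i, ex in enumerate(examples, start=1):
        label = "GOOD" if ex.good else "BAD"
        lines.append(f"Example {i} ({label}): {ex.text}")
        lines.append(f"Reason: {ex.reason}")
    return "\n".join(lines)


# Illustrative examples only.
EXAMPLES = [
    Example(
        text="Mera naam Priya ___. Choices: hai / hain / ho / hoon",
        good=True,
        reason="Romanized Hindi with exactly one correct answer (hai).",
    ),
    Example(
        text="मेरा नाम प्रिया ___।",
        good=False,
        reason="Native script only; learners who cannot read it need a Roman transliteration.",
    ),
]

if __name__ == "__main__":
    print(build_few_shot_prompt("Write one fill-in-the-blank question.", EXAMPLES))
```

Giving a reason next to each bad example tells the model what to avoid, not just what to copy. Every example also adds input tokens to every request, which is the cost that lower per-token pricing makes easier to absorb.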

Better Quizzes, Lower Costs, and a Scalable System

These changes had multiple benefits:

- Consistent Output: With per-question tools and structured schemas, we could implement strong guardrails and validate the quality of each generated question.

- Scalability: Question generation became modular and asynchronous, so it can be used outside the chat interface if needed.

- Accessibility: Gemini 1.5 Flash proved reliable in producing Hindi and Urdu output with accurate Romanized transliterations, critical for learners not yet fluent in reading native scripts.

- Affordability: Detailed prompting and high-quality outputs are now viable thanks to Gemini's pricing model.
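The consistency and scalability points can be sketched together: each question comes from its own async call, is checked against simple guardrails, is retried if it fails, and all questions in a quiz run concurrently. This assumes the model returns each question as JSON text. `call_model`, `parse_question`, the field names, and the three-attempt retry limit are hypothetical; the post does not say how failed validations are handled, so retrying is just one option. `fake_model` stands in for a real structured-output call.

```python
import asyncio
import json
from typing import Awaitable, Callable, Optional

# Hypothetical signature of a model call: (question_type, topic) -> raw JSON text.
ModelCall = Callable[[str, str], Awaitable[str]]

REQUIRED_KEYS = {"prompt", "choices", "correct_index", "explanation"}


def parse_question(raw: str) -> Optional[dict]:
    """Return the parsed question if it passes basic guardrails, else None."""
    try:
        q = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(q, dict) or not REQUIRED_KEYS.issubset(q):
        return None
    choices = q["choices"]
    # Exactly four distinct, non-empty string choices.
    if not (isinstance(choices, list) and len(choices) == 4
            and all(isinstance(c, str) and c.strip() for c in choices)
            and len(set(choices)) == 4):
        return None
    idx = q["correct_index"]
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(idx, bool) or not isinstance(idx, int) or not 0 <= idx < 4:
        return None
    for key in ("prompt", "explanation"):
        if not isinstance(q[key], str) or not q[key].strip():
            return None
    return q


async def generate_question(call_model: ModelCall, question_type: str, topic: str,
                            max_attempts: int = 3) -> dict:
    """Call the model until it returns a valid question or the attempts run out."""
    for _ in range(max_attempts):
        question = parse_question(await call_model(question_type, topic))
        if question is not None:
            question["type"] = question_type
            return question
    raise RuntimeError(f"no valid {question_type} question after {max_attempts} attempts")


async def generate_quiz(call_model: ModelCall, plan: list[str], topic: str) -> list[dict]:
    """Generate every question in the plan concurrently; results keep the plan's order."""
    return await asyncio.gather(*(generate_question(call_model, t, topic) for t in plan))


async def fake_model(question_type: str, topic: str) -> str:
    """Stand-in for a real structured-output call; always returns a valid question."""
    await asyncio.sleep(0)  # yield to the event loop, as a real network call would
    return json.dumps({
        "prompt": f"Sample {question_type} question about {topic}",
        "choices": ["hai", "hain", "ho", "hoon"],
        "correct_index": 0,
        "explanation": "Placeholder explanation.",
    })


if __name__ == "__main__":
    plan = ["fill_in_the_blank"] * 4 + ["best_english_translation"] * 3
    quiz = asyncio.run(generate_quiz(fake_model, plan, "introductions"))
    print(len(quiz))  # 7
```

Since `generate_quiz` only needs a plan and a topic, the same code could run from a background job instead of the chat interface.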

What's Next

We're excited by the results: high-quality quizzes, lower costs, and a flexible system we can continue to improve. In future posts, we'll share more about our multi-agent architecture and other parts of the system.